How to Make Wiser Decisions

Introductory Note: Current research in the laboratory at the University of California, San Diego, conducted with Dr. Stephanie Stolarz-Fantino, centers on human decision-making, especially non-optimal, illogical, and impulsive decisions. We also study choices involving the distribution of resources, including an experimental analog of altruism, as well as human problem-solving and reasoning. We find that all of these decisions may be best understood through a thorough appreciation of the historical context to which the decision-maker has been exposed.

Decisions, Decisions!

who are putting us on, or by imbeciles who really mean it.

–Mark Twain

Not all decisions at the executive level are flawed, of course, and not all bad decisions are made by executives, but even some of the best decisions seem to have been made by happenstance, luck, or what some people call “instinct.” Many people believe that the ability to make good decisions in a leadership role is not so much shaped by the environment as hardwired into the brains of those who end up leading our corporations. In a somewhat shortsighted approach, many authors who write about leadership and decision making assume that a history of making good decisions (even a very brief history), and the events that follow from such “good decisions,” predict that good decisions will continue in the future. As a result, once someone in a leadership role has made several successful decisions, that person is given the label of good decision maker. In reality, research tells us that decisions should not be judged as worthy until they have been tested by how they affect the decision maker’s future actions.

Shifts in social and economic fortune, the winds of war, or a change in our culture are all results of decision making at some level. Still, as one pundit said, “Bad decisions make good stories.” If we simply watch the evening news we might observe that people have a tendency to make poor decisions. Current research verifies that observation but also provides practical implications about poor decisions and their causes. In fact, behavioral scientists have discovered that poor decision making is often the result of the misapplication of rules and principles that have led to effective decisions in the past. For example, we may think that always buying the large, “economy size” of a product is the best buy, or that a package option for a concert series is a better buy than purchasing tickets separately for each event, when actually neither is always the case. In other words, the principles that we have acquired from a lifetime of experience enable us to make rapid and efficient decisions, but when those experiences don’t actually apply, they lead us astray.

Proof in the Pudding

Decisions are also determined by a phenomenon that Hal R. Arkes and Catherine Blumer call the “sunk cost effect,” meaning that people are often more influenced by what they have already invested than by the factors that should determine the appropriate action. This tendency can be attributed to lifelong lessons of “Waste not; want not.” Interestingly, even economics students who are well versed in the sunk-cost concept fall into the same trap. When confronted with a situation in which they have invested more in one option than in another, they opt for their less-preferred option if they have made more of an investment (usually financial) in it. The takeaway lesson here is that training does not necessarily immunize one from making irrational, illogical, or otherwise non-optimal decisions.

Under what conditions do people seek information? Surprisingly, humans are not the enthusiastic information seekers that many of us might think. And how well do we apply information after we have acquired it? This question leads to the fascinating world of reasoning fallacies studied by specialists in judgment and decision making. A behavioral approach helps us understand these fallacies and human choice, giving us a fuller picture of decision making and of the conditions that surround it and predict the likelihood of success or failure. The science tells us a lot about where good decisions come from, whether we are good or bad at decision making, and whether good decision making is a skill that can be taught and honed.

No News is Good News!

In a series of experiments involving a number of species, including humans, behavioral scientists observed that many species will seek information even though doing so produces no immediate reinforcement. This, of course, raises the basic question “Why does this occur?” Two theories were proposed to answer that question, roughly stated as follows:

- Information serves as its own reward, because the information may prove profitable for future use.

- Seeking out information is sometimes associated with positive outcomes and a higher likelihood of faster reward. It is this occasional good news that supports information seeking.

In 1972, Robert Bloomfield captured the critical difference between the two theories when he noted that, according to the first view, a subject would pay attention to both “good news” and “bad news” (since both are informative), while according to the second view, only “good news” associated with a positive outcome would maintain a subject’s attention. As it turns out, the preponderance of the evidence suggests that people attend to bad news only when the bad news has some usefulness, a finding that supports the second view. However, people prefer “no news” to bad news when the latter cannot be utilized. In other words, it is unlikely that many of us would want to know something dreadful was about to happen if we could do nothing to forestall it.

The “take-home message” here is that we cannot assume that individuals will absorb, or even pay attention to, information that might prove valuable in present or future decision making. Instead, we appear to treat bad news as something to be avoided. Apparently, compared to “bad news,” “no news is good news,” a phenomenon that may also clarify the historical success of “Yes Men!”

Using Information Logically . . . or Not!

If humans appear to be more interested in the favorable affect of “news” than in its informative value, how do they behave when confronted with conflicting information? Several types of “fallacy problems” that negatively impact optimal decision making have been identified: (1) our response to compound statements, called the conjunction fallacy; (2) a concept called base-rate neglect; (3) a problem-solving tendency called probability matching or learning; and (4) the previously discussed sunk-cost effect.

Repeated studies by behavioral scientists Amos Tversky and Daniel Kahneman revealed that when people were supplied with a compound statement, they made decisions based more on personal intuition than on fact. For example, in certain contexts subjects judged the compound statement “Bill is an accountant and plays jazz for a hobby” as more likely to be true than the simple statement “Bill plays jazz for a hobby,” even though the compound can be true only if its jazz component is true. Many such examples demonstrate that people make intuitive decisions based on the very form in which facts are presented.

Scores of subsequent, similar studies, in which clear instructions were given to make judgments in terms of probability and not simply in terms of intuitive appeal, actually resulted in a larger proportion of subjects exhibiting this tendency, called the conjunction fallacy. Even high-achieving students with explicit training in logic demonstrated the fallacy!

Why does the conjunction fallacy occur? One possibility considered was that the subjects studied were not sufficiently motivated to take the experiments seriously. Consequently, researchers provided feedback regarding the logical correctness of subjects’ responses, and offered a handsome monetary reward just for answering correctly. Neither the feedback nor immediate monetary reward improved performance, a finding that might appear ironic in view of some of the exorbitant salaries and bonuses offered to corporate executives.

A second possible explanation for the conjunction fallacy is that subjects may respond to compound statements by assessing the reasonableness of each part of the sentence, averaging those assessments, and treating the average as the likelihood of the compound. Research shows that averaging is a commonly used tool for integrating multiple pieces of information in decision making.
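To make the averaging account concrete, here is a minimal Python sketch. The likelihoods assigned to the two parts of the “Bill” statement are hypothetical numbers chosen for illustration, not data from the studies, and the function names are our own. Averaging a plausible part (accountant) with an implausible part (jazz) yields a rating for the compound that exceeds the rating of the implausible part alone, which probability theory forbids.

```python
# Hypothetical subjective likelihoods for the two parts of the "Bill" statement.
# These numbers are illustrative assumptions, not experimental data.
P_ACCOUNTANT = 0.8  # "Bill is an accountant" seems very plausible
P_JAZZ = 0.1        # "Bill plays jazz for a hobby" seems implausible


def averaged_judgment(p_a: float, p_b: float) -> float:
    """Averaging model: rate the compound as the mean of its parts."""
    return (p_a + p_b) / 2


def conjunction_upper_bound(p_a: float, p_b: float) -> float:
    """Conjunction rule: P(A and B) can never exceed min(P(A), P(B))."""
    return min(p_a, p_b)


def conjunction_if_independent(p_a: float, p_b: float) -> float:
    """P(A and B) under the extra assumption that A and B are independent."""
    return p_a * p_b


avg = averaged_judgment(P_ACCOUNTANT, P_JAZZ)
bound = conjunction_upper_bound(P_ACCOUNTANT, P_JAZZ)
indep = conjunction_if_independent(P_ACCOUNTANT, P_JAZZ)

print(f"averaged rating of the compound:    {avg:.2f}")
print(f"largest value probability allows:   {bound:.2f}")
print(f"value if the parts are independent: {indep:.2f}")
print("averaging violates the conjunction rule:", avg > bound)
```

The independence calculation rests on an extra assumption; the conjunction rule itself (a compound can be no more likely than its least likely part) holds whether or not the parts are independent.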

Overall, research on the conjunction fallacy suggests that it is a robust phenomenon, occurring, as mentioned previously, even in highly educated subjects, including those with training in logic. It is likely that the fallacy depends, at least in part, on a tendency to average the likelihoods of the possible outcomes we need to incorporate into our decisions. This tendency to average often serves us well when we integrate information to make judgments. However, averaging is inappropriate for some problems, such as judging compound statements, and can therefore lead us to misapply learned rules and reach bad and incorrect decisions.

No doubt, the research of Tversky and Kahneman is highly complex, but they neatly summarize the problem that it presents as follows:

Uncertainty is an unavoidable aspect of the human condition. Many significant choices must be based on beliefs about the likelihood of such uncertain events as the guilt of a defendant, the result of an election, the future value of the dollar, the outcome of a medical operation, or the response of a friend. Because we normally do not have adequate formal models for computing the probabilities of such events, intuitive judgment is often the only practical method for assessing uncertainty. . . . Because individuals who hold different knowledge or hold different beliefs must be allowed to assign different probabilities to the same event, no single value can be correct for all people. Furthermore, a correct probability cannot always be determined even for a single person. . . . We do not share Dennis Lindley’s optimistic opinion that “inside every incoherent person there is a coherent one trying to get out,” and we suspect that incoherence is more than skin deep.

Gary N. Curtis, Ph.D., on his Web site, www.fallacyfiles.org, explains the conjunction fallacy as follows:

The probability of a conjunction is never greater than the probability of its conjuncts. In other words, the probability of two things being true can never be greater than the probability of one of them being true, since in order for both to be true, each must be true. However, when people are asked to compare the probabilities of a conjunction and one of its conjuncts, they sometimes judge that the conjunction is more likely than one of its conjuncts. This seems to happen when the conjunction suggests a scenario that is more easily imagined than the conjunct alone.

Interestingly, psychologists Kahneman and Tversky discovered in their experiments that statistical sophistication made little difference in the rates at which people committed the conjunction fallacy. This suggests that it is not enough to teach probability theory alone, but that people need to learn directly about the conjunction fallacy in order to counteract the strong psychological effect of imaginability.

In other words, simply being aware of the human tendency to commit the conjunction fallacy may help us consider its implications before rushing into snap decisions. As Charlie Chan said at the racetrack, “Hasty conclusion like toy balloon: easy blow up, easy pop.”

Base-Rate Neglect and Probability Matching: Pay No Attention to that Man Behind the Curtain!

You have to have doubts. I have collaborators I work with.

I listen and then I decide. That's how it works.

A series of complex studies on judgment and decision making points to another apparently very human phenomenon, which scientists call base-rate neglect. The details of these experiments are highly intricate, but the basic finding is that, when assessing the probability of a future event, people often ignore background information (the base rate) in favor of case-specific information. Comparison studies using pigeons and humans indicate that this propensity to apply rules, often learned since childhood, may be a distinctly human tendency. In other words, we apply our learning histories to problem solving even when some facts indicate otherwise.
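Base-rate neglect can be illustrated with Bayes' rule. The following Python sketch uses hypothetical numbers: a 15 percent base rate, and case-specific evidence that points to the category 80 percent of the time when it applies and 20 percent of the time when it does not. None of these figures comes from the studies described here, and the function name is our own. Combining the base rate with the evidence gives a probability of roughly 41 percent, well below the 80 percent that an intuitive, evidence-only judgment suggests.

```python
def posterior(base_rate: float, hit_rate: float, false_alarm_rate: float) -> float:
    """Bayes' rule: P(category | evidence) from the prior and two likelihoods.

    base_rate: P(category) before any case-specific evidence.
    hit_rate: P(evidence | category).
    false_alarm_rate: P(evidence | not category).
    """
    p_evidence = base_rate * hit_rate + (1 - base_rate) * false_alarm_rate
    return base_rate * hit_rate / p_evidence


# Hypothetical numbers chosen only for illustration.
BASE_RATE = 0.15
HIT_RATE = 0.80
FALSE_ALARM_RATE = 0.20

with_base_rate = posterior(BASE_RATE, HIT_RATE, FALSE_ALARM_RATE)
neglecting_base_rate = HIT_RATE  # intuitive answer: trust the evidence alone

print(f"using the base rate:      {with_base_rate:.2f}")
print(f"neglecting the base rate: {neglecting_base_rate:.2f}")
```

Note that posterior(0.5, 0.8, 0.2) returns 0.8: ignoring the base rate amounts to silently assuming that the two possibilities were equally likely to begin with.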

Humans as Problem Solvers

When humans are repeatedly presented with the same choice between two alternatives, each associated with a constant likelihood of payoff, they tend to match their choices to the arranged probabilities instead of maximizing their payoffs by always choosing the alternative with the higher likelihood of reward. This means that even when people know that one outcome pays off 75 percent of the time (and the other outcome, therefore, pays off 25 percent of the time), they tend to choose the superior outcome only 75 to 80 percent of the time rather than every time. Researchers call this rather odd practice probability matching and speculate that it occurs because human beings treat such tasks as problems to be solved, maybe even odds to be beaten. This view offers insight into why probability matching occurs and how it might even be eliminated.
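The cost of probability matching can be worked out directly. In this minimal Python sketch we assume, as in the 75/25 example, that exactly one of the two alternatives pays off on each trial, and that a person's choice on a given trial is independent of which alternative actually pays off; the function name is our own. Under those assumptions, matching at 75 percent is correct only 62.5 percent of the time, whereas always choosing the better alternative is correct 75 percent of the time.

```python
def expected_accuracy(p_better: float, q_choose_better: float) -> float:
    """Probability of a correct choice on one trial.

    p_better: chance that the better alternative pays off (the other pays
        off the rest of the time, so exactly one pays off per trial).
    q_choose_better: chance that the person picks the better alternative,
        independently of which alternative actually pays off.
    """
    correct_when_choosing_better = q_choose_better * p_better
    correct_when_choosing_worse = (1 - q_choose_better) * (1 - p_better)
    return correct_when_choosing_better + correct_when_choosing_worse


P = 0.75  # the better alternative pays off 75 percent of the time

strategies = {
    "probability matching (75%)": P,
    "slight overmatching (80%)": 0.80,
    "maximizing (100%)": 1.0,
}

for name, q in strategies.items():
    print(f"{name}: {expected_accuracy(P, q):.3f}")
```

Because the expression is linear in q_choose_better, any shift toward the better alternative raises expected accuracy, and the maximum is reached only by choosing it every time.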

It appears that humans, not content with being correct most of the time, as they would be by choosing the more likely payoff, have learned that there generally is a “solution” that will always lead to a payoff. Non-humans don’t have these expectations and perhaps that is why they choose optimally: pigeons and rats always select the more likely payoff.

My colleague, Ali Esfandiari, and I hypothesized that people in probability-matching tasks will typically seek a way to be correct 100 percent of the time, so we informed subjects that the best they could do on a series of trials was to be correct 75 percent of the time. The experiment was partially successful in reducing the likelihood that subjects would still make the less-than-optimal choice in hopes of reaching 100 percent accuracy. This indicates that more optimal choices can be eventually attained over a number of trials without actually telling people that they should choose the more likely outcome on every trial. However, in real life, how many bad decisions does that entail? The answer is quite a few! (But of course, since all of us have been children, and many of us parents, we know that even telling people the choices they should make often doesn’t make a difference either.)

Another treatment that enhanced decision-making performance in probability matching involved asking participants to advise others on how to approach the task. This had the effect of encouraging the participants to reflect on the contingencies and once they articulated a more effective strategy for others to follow, they were more likely to use the same strategy themselves.

The Web page www.wisegeek.com, discussing base-rate neglect and how humans deviate from probability theory, explains the problem as follows:

These deviations occur because humans are often forced to make quick judgments based on scant information, and because the judgments which are most adaptive or rapid are not always the most correct. It appears that our species was not crafted by evolution to consistently produce mathematically accurate inferences based on a set of observed data.

For example, in one experiment, a group was asked to assign probable grade point averages (GPAs) to a list of 10 students based on descriptions of each student’s habits and personalities. Poor habit/personality descriptions coincided with predicted poor grade scores and vice-versa. Even though the group was also given information on students’ academic performance based on probability theory, that information did not appear to affect the GPA judgments. Instead, the group estimated GPAs according to their judgment of habits and personalities. Researchers concluded that “these skewed probability estimates occur every day in billions of human minds, with substantial implications for the way our society is operated.”

Insanity is doing the same things again and again expecting different results.

–Albert Einstein

As an airline company president, you have invested $10 million of the company’s money in a research project with the purpose of building a plane that cannot be detected by conventional radar—a radar-blank plane. When the project is 90 percent complete, however, another firm begins marketing a plane that cannot be detected by radar. Also, it is apparent that their plane is much faster and far more economical than the plane your company is building. The question is, “Should you invest the last 10 percent of the research funds to finish your radar-blank plane?”

Experimenters found that 85 percent of participants in this hypothetical study opted to complete the project even though the completed aircraft would be inferior to one already on the market. Among a corresponding group of participants given the same problem but without mention of the prior investment (that is, without mention of the “sunk cost”), only 17 percent opted to complete the project. The explicit sunk cost of $10 million made all the difference!
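From a purely forward-looking point of view, the prior investment should not enter the decision at all. The minimal Python sketch below makes that explicit: the rule compares only what finishing will still cost with what the finished plane is expected to return. The function name and the dollar figures (in millions) are hypothetical; the text gives no estimate of the plane’s future value, so we simply assume it is small because a faster, cheaper competitor is already on the market.

```python
def should_finish(remaining_cost: float, expected_future_value: float,
                  sunk_cost: float) -> bool:
    """Forward-looking rule: finish only if the project's expected future
    value exceeds the cost still required to complete it.

    sunk_cost is accepted only to show that it plays no role: money already
    spent is gone whichever way the decision goes.
    """
    return expected_future_value > remaining_cost


# Hypothetical figures, in millions of dollars.
REMAINING_COST = 1.0         # assumed cost of the last 10 percent
EXPECTED_FUTURE_VALUE = 0.3  # assumed: low, given the superior rival plane

with_history = should_finish(REMAINING_COST, EXPECTED_FUTURE_VALUE, sunk_cost=10.0)
without_history = should_finish(REMAINING_COST, EXPECTED_FUTURE_VALUE, sunk_cost=0.0)
print(with_history, without_history)  # same answer either way
```

The gap between 85 and 17 percent is exactly the difference that a rule of this kind says should not exist.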

While this result is consistent with the hypothesis that participants are trying to avoid “waste,” it is also consistent with an alternative hypothesis, known as “self-justification.” According to this view, we persist in a failing action because we are justifying our previous decision (for example, to spend $10 million on developing the aircraft). Deciding not to complete the project might be construed as an admission that we should not have embarked on it in the first place. Some research shows that the sunk-cost effect is just as persistent in groups as it is in individuals, leading to speculation about its contribution to the downfall of past human societies.

In fact, studies suggest that there are many cases where self-justification provides a more compelling account than waste avoidance. Anton D. Navarro’s research shows that sunk-cost effects are minimized when the nature of future gains and losses is made more transparent to the decision maker. However, in the world of business, who will step up to make such facts transparent? Keep in mind the earlier finding that, to most people, “no news is good news.” A wise employee might also remember the well-known phrase about killing the messenger.

Killing the Messenger

If self-justification is implicated in particular cases of the sunk-cost effect, then we might expect the effect to be exacerbated when the situation emphasizes the personal responsibility of the decision-maker. Two lines of research support this view. First, a series of studies by Sonia Goltz and her colleagues has demonstrated persistence of commitment in a task involving investment decisions. In one experiment, Goltz compared the degree of maladaptive persistence in participants who believed that they alone were responsible for making the company’s investment decisions with that in participants who believed that their advice was pooled with that of other advisors to arrive at the final decision. Those who felt individually responsible persisted significantly longer. Second, one of several studies conducted by Navarro illuminated the factors governing sunk-cost behavior: when participants had chosen to incur the costs, they persisted significantly more than did participants on whom the costs had been imposed. Thus, increasing personal responsibility increases susceptibility to the sunk-cost effect.

Escalation of Commitment Gone Wild

Taurus IT Project – An attempt by the London Stock Exchange to implement an electronic share settlement process. The project started in 1986 and was expected to take three years and 6 million pounds to complete. The project was finally scrapped in 1993 at a loss of nearly 500 million pounds.

This effect occurs in a variety of tasks, and such studies provide evidence that past reward history may influence decision-makers’ persistence under failure conditions. Goltz, for example, tested persistence in a simulated investment task in which she manipulated subjects’ past experience of the success or failure of their investments. Subjects received returns (success) on their investments in one of two investment alternatives according to one of the following schedules: (1) on every trial; (2) on every other trial; or (3) unpredictably, but on average on one of every two trials. When conditions were later altered so that the investment alternative continuously failed to pay off, subjects who had been exposed to the least predictable schedule persisted in investing significantly longer than those in the other two conditions. Goltz also found increases in persistence when subjects’ histories included higher rates and greater magnitudes of reward. All of these results are consistent with a behavioral view of the importance of reward history for understanding persistence of commitment.
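The three reward schedules in Goltz’s task can be sketched as sequences of trials on which an investment does or does not pay off. The Python below is a minimal illustration under our own assumptions: the function names, the number of trials, and the use of a fair random draw for the unpredictable schedule are hypothetical, and the sketch does not model how long a subject persists once returns stop.

```python
import random
from typing import List


def continuous(n_trials: int) -> List[bool]:
    """Schedule (1): a return on every trial."""
    return [True] * n_trials


def fixed_every_other(n_trials: int) -> List[bool]:
    """Schedule (2): a return on every second trial, in predictable alternation."""
    return [i % 2 == 1 for i in range(n_trials)]


def variable(n_trials: int, p: float = 0.5, seed: int = 0) -> List[bool]:
    """Schedule (3): unpredictable returns, on average one trial in 1/p."""
    rng = random.Random(seed)  # seeded so the sequence is reproducible
    return [rng.random() < p for _ in range(n_trials)]


def extinction(n_trials: int) -> List[bool]:
    """Later phase: the chosen investment never pays off."""
    return [False] * n_trials


# Example: 20 trials of unpredictable returns followed by 10 trials of failure.
history = variable(20, seed=42) + extinction(10)
print("".join("$" if paid else "." for paid in history))
```

Over many trials the fixed and variable schedules pay off equally often; what differs is predictability, which is the feature associated with longer persistence in the results described above.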

It is important to note, however, that the sunk-cost effect isn’t always about money. The cost may also be an investment of time and personal effort, as when you stick with a career you hate because you put so much effort into your college studies, or when you can’t let go of a bad relationship because you’ve invested years of your life trying to make it work.

Knowing that moving on is an option, actively considering your patterns of persisting in something because of the effort already expended, and starting over in the moment are all good advice. To act differently, however, requires a different set of sustainable reinforcers: a different habit to build. As behavior analysts, we recognize that you, the person trapped by time and effort or by the “almost done” feeling, may have tremendous difficulty moving on, but there are behavioral strategies that can help you do so. Moving on does not happen just by saying “Do it!”; arrange to get help. Do not rely on your ability to step aside on your own after months or even years of persistence. Remember that there are ways to return to an activity with a clearer head once you have found a way to break away. We find that advice that simply tells you to stop clinging disregards the tremendous power of the positive reinforcement you receive from continuing to cling. Alternative sources of reinforcement are often needed to make the break, and for what is often called addictive behavior, punishment for continuing is sometimes required as well.

Making Good Decisions

what their strengths are, where they need to improve,

and where they lack knowledge or information.

–Peter Drucker

Raising the ire of most Americans, auto executives flew to Washington on their expensive private jets to beg the Senate for taxpayer money. This is the way they had always done business in the past: big show, big impression. This time, it was the wrong impression! Northern Trust Bank, one of many similar examples, threw a series of extravagant parties, events, and concerts in Los Angeles for clients and high-ranking employees after being bailed out with billions. Although the bank had previously laid off 450 employees, a company spokesman stated that the money for lavish parties and giveaways had already been included in the company’s operating expenses before the bailout, and defended the spending as a PR strategy that had succeeded in the past: business as usual.

Though such examples are shocking, and we would like to think of them as rare cases of extraordinarily poor decisions, research findings don’t paint a rosy picture of human decision making. And although research helps us understand much about poor decisions, we should acknowledge that the analysis of decision making remains incomplete. Still, we do know that people are not always seekers of information (the observing literature), and that they often do not use information in a logical (conjunction fallacy, base-rate neglect) or optimal (base-rate neglect, probability matching/learning) fashion. Moreover, these examples of non-optimal decision making are all strong, complex, and prevalent in human behavior. Awareness of these fallacies can, we hope, help us better appreciate the extent to which, and the conditions under which, we seek and effectively use information in our everyday decisions. If decision makers use this information for nothing more than to understand that a “talent” for making optimal decisions may be highly serendipitous, they may be able to avoid, or at least reduce, decisions based on the misapplication of learned rules and on self-deception.

If you find yourself frequently saying (or thinking) the following statements, you may need to improve your decision-making skills:

- I can evaluate a situation in five minutes and make a decision.

- Good decision-making is a gut reaction, an intuitive talent.

- I don’t want anyone to think I made a mistake.

- Never second-guess yourself.

- I will listen to your input, but I’ve already made up my mind.

- People who point out the negative possibilities are just troublemakers.

- We’ve always done it this way.

- If it worked then, it will work now!

- Don’t ask those outsiders; they don’t have any stake in this decision.

- We’ve already put too much time and money into this to quit now.

- I know his/her type.

A Caution to the Reader

Be aware of your own limitations, and surround yourself with others who understand these fallacies and our tendency to practice imperfect approaches to decision making, which is often viewed as an intrinsic ability rather than a skill to be learned and refined. Keep in mind that, in many circumstances, a decision is judged to be good only after the fact. Many of the big decisions recorded in history, or praised as evidence of a particular CEO’s excellence, were described in broad terms, and the variables that actually led to the corporation’s success or failure were then attributed to the decision maker. The many factors of success often unfold along a continuum far removed from the original choice, and sometimes in spite of that choice!

Another factor is that one choice may have led to a series of actions that look good—but we do not know if they could have been better. Be ready to do post-decision debriefs on what you and others judge to be “the best decision ever” and see if in the chain of events triggered by that decision, improvements could have been made along the way. You can learn to institutionalize checkpoints for decisions made as they ripple out. Careful modifications in plans are a sign of being responsive to ongoing data.

For those of you in positions where decision making is part of why you were chosen for your role, manage with modesty and consider carefully any personal blinders you may have. Take the principles here to heart and treat each new day as your Groundhog Day of decision making. Gain fluency in the factors of flawed decision making, and broaden what you acknowledge and what you attend to. Remember that good decision making is a continuous learning event.

Now What Do I Do?

–Dwight D. Eisenhower

Now that you’re aware of the possible pitfalls in making decisions, don’t lose confidence! Remember that knowledge is power. You can begin to hone your decision-making skills simply by remembering the common elements of flawed decision making discussed in previous chapters. For example, you might incorporate the following initial steps into your decision-making process:

- Seek out and seriously examine conflicting information.

- Hire and reward employees at all levels who point out that the emperor has no clothes, whether about decisions affecting safety, productivity, customer service, or new initiatives, or about the small daily effects of information they have and you do not.

- If you are an executive, hire advisors whose job is to bring you information that is “negative” but truthful and constructive: the “bad news” along with the good.

- When making decisions, listen to well-informed professionals who do not have a personal stake (such as self-justification) in a previously made decision and/or investment of time and/or money (sunk costs).

- Hired employees or consultants always have some stake, some sunk costs, but if their role is structured correctly, such monitors can do a very good job of identifying the spin you are putting on your own and/or the organization’s past history. When you find such people, be sure to let them know you like them “just the way they are.”

- Remember that “out of the box” thinking can quickly become “in the box” thinking if novel approaches to problem solving are ignored or discouraged.

- Don’t rely on making decisions from a “gut feeling” or the “way you’ve always made decisions.”

Probability matching: There’s got to be a catch! Even when told which choices will definitely bring the best results, people want to test the odds. Often, a fact really is a fact. “This [finding] indicates that more optimal choices can be eventually attained over a number of trials without actually telling people that they should choose the more likely outcome on every trial. However, in real life, how many bad decisions does that entail?”
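The arithmetic behind probability matching can be sketched briefly. Assume a hypothetical two-outcome task in which outcome A occurs on 70% of trials; the 70/30 split and the function name `expected_accuracy` are illustrative assumptions, not figures from the research described here. Always predicting A is correct on 70% of trials, while “matching” (predicting A on 70% of trials and B on the rest) is correct on only 0.7 × 0.7 + 0.3 × 0.3 = 58% of trials.

```python
def expected_accuracy(p_outcome_a: float, p_choose_a: float) -> float:
    """Expected proportion of correct predictions in a two-outcome task.

    p_outcome_a: probability that outcome A occurs on a trial (hypothetical).
    p_choose_a: probability that the decision maker predicts A on a trial.
    Predictions are assumed to be independent of the actual outcome.
    """
    p_outcome_b = 1.0 - p_outcome_a
    p_choose_b = 1.0 - p_choose_a
    # A prediction is correct when it agrees with the outcome that occurs.
    return p_choose_a * p_outcome_a + p_choose_b * p_outcome_b


if __name__ == "__main__":
    p = 0.7  # illustrative assumption: A occurs on 70% of trials
    maximizing = expected_accuracy(p, 1.0)  # always predict the likelier outcome
    matching = expected_accuracy(p, p)      # predict A on 70% of trials
    print(f"maximizing: {maximizing:.2f}")  # prints "maximizing: 0.70"
    print(f"matching:   {matching:.2f}")    # prints "matching:   0.58"
```

Because expected accuracy in this simple setup rises steadily as the share of A predictions increases (whenever A is the likelier outcome), any strategy other than always choosing the more likely outcome gives up correct predictions, which is the cost the quoted passage points to.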

Sunk-cost Effect (associated with self-justification): “We’ve already gone this far.” “Look, I made the decision and I’m seeing it through!” “Do you know the amount of money we’ve invested in this project?” “Some research shows that the sunk-cost effect is just as persistent in groups as it is in individuals, leading to speculation about its contribution to the downfall of past human societies.”

When you are advised to make a personal change, remember the extraordinary effect of past success on future behavior. Patterns of decision making are often sustained long after they have stopped working, because you may not connect the process you go through with its sometimes remote impact. You may think that what happened was accidental or due to those other folks, and it may well have been.

The task for each of us is to identify typical decision dilemmas, such as those highlighted in the list above, and to use them as a “checklist” in the process of deciding. Once we become fluent at analyzing our own process, we can begin to see where we need to change. Get a buddy, a trusted advisor, to make you go through the list: to test assumptions, to seek contrary information, and to respond to new information in an informed way rather than simply explaining it away or discounting it. Use a decision tool that lists these typical fallacies, and check your decision against it.

If you are part of a team, make your decision-making process an open one. Name those elements, and work to understand among yourselves how you are handling the various parts of decision making, including new information that might force a conclusion different from the one you really believe, or want to believe. You will then be better able to tackle the mountain of history behind doing what you have done, with reasonable success, for all these years.

Any new behavior, such as a commitment to use a list, disappears quickly unless you arrange systematic conditions to prompt and sustain it. A new approach to decision making (checking how others see it, how different information might shape it, and how your own biases and prior success may shape it) requires practice and review, practice and review. Do not shortchange these activities. As when learning the piano without proper instruction, you may overlook a subtlety and end up playing at a pace that sounds discordant to the trained ear.

Your history of learning is the key! No matter how bright human beings are, we are to a large extent synthesizers, trying to make sense of our world and reinforced by our own perceived success, as reflected in the way others respond to us and how events unfold. We are full of patterns about decisions and about how we read the world around us. The decisions we make may sound like a harmonious whole, yet at times they may be full of small discordant notes, until eventually they are so loudly off-key that neither we nor those around us can ignore the noise.

Understand the science of behavior analysis. Consider that you are not wise, only “lucky” once in a while, unless you have been applying these lessons along the way. Work on wisdom in decisions. A modest review of your current success as a decision maker against these known flaws may help, and may even lead to a sigh of relief that you have done as well as you have, and that any of us do as well as we do.

Know this truth: Don’t make a very big decision that is wrapped in the spun cloth of your prior decisions.

Begin to manage your decisions. Arrange the conditions that precede a decision and those that follow to ensure you do not fall into your own “understanding.” Be your own best skeptic and surround yourself with good people who keep their eyes wide open.

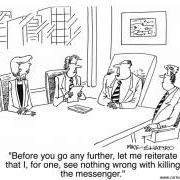

Now that you know how many missteps can occur when making a decision, you may feel paranoid, if not paralyzed, by the information. Help is on the way. When you are charged with making a difficult or pivotal decision, Dr. Aubrey Daniels’ Five-step Model*, a behavioral approach to problem solving and to designing successful behavior change, can help. While it will not free you from your particular history of reinforcement for doing what you do without very deliberate work, it can help ensure that you have considered all the elements required to jump-start new patterns of behavior. When approaching a decision, take the following steps:

1) Pinpoint: Define the specific result you want as the outcome of your decision. Consider the why, what, and how of getting to that outcome. Determine the behaviors that you and others must engage in to drive this result, and weigh the investment carefully. Understand, to the degree you can, every element involved in ensuring success or making progress toward your goal, and do so in specific and visible ways. When appropriate, write down any concerns and discuss them. Focus on what you can see on the road ahead, but also do a “what if” analysis of possible barriers, and be prepared to address those barriers if and when they arise. Pinpoint how you will respond to contrary evidence, including a process for adapting or amending your plan.

Remember Ralph Waldo Emerson’s words as you go down this path: “A foolish consistency is the hobgoblin of little minds, adored by little statesmen and philosophers and divines.”

Now make a decision, already!

For more information on coaching plans for decision-making strategies and problem solving, and workshops on this topic, contact ADI.

It is with great sadness that ADI shares the passing of Dr. Fantino on September 23, 2015. His ever-buoyant spirit in the face of his 30-year battle with cancer continues to serve as an example of how we all should live our lives, each and every day.

Edmund Fantino received his B.A. in mathematics from Cornell University in 1961 and his Ph.D. in experimental psychology from Harvard University in 1964. After serving as Assistant Professor at Yale University (1964-1967), he moved in 1967 to the University of California, San Diego, where he was named Distinguished Professor of Psychology and the Neurosciences Group and won awards for distinguished teaching. His research centered on decision making. He developed delay-reduction theory, applied choice methodology to explore experimental analogues of foraging behavior, and extended behavioral principles and methodology to the study of human reasoning, choice (including gambling and self-control), and problem solving. He authored three books: Introduction to Contemporary Psychology (Fantino & Reynolds, 1975), The Experimental Analysis of Behavior: A Biological Perspective (Fantino & Logan, 1979), and Behaving Well (Fantino, 2007), an account of how he used behavioral principles in his 22-year confrontation with prostate cancer. He served as Editor of the Journal of the Experimental Analysis of Behavior (1987-1991) and was President of the Society for the Experimental Analysis of Behavior.

Some recent papers from the laboratory of Drs. Fantino and their students that explore the study of choice and the issues described in this article are:

Fantino, E. (2004). Behavior-analytic approaches to decision making. Behavioural Processes, 66, 279-288.

Fantino, E., Jaworski, B.A., Case, D.A., and Stolarz-Fantino, S. (2003). Rules and problem solving: Another look. American Journal of Psychology, 116, 613-632.

Fantino, E., Kanevsky, I.G. and Charlton, S. (2005). Teaching pigeons to commit base-rate neglect. Psychological Science, 16, 820-825.

Fantino, E., and Kennelly, A. (2009). Sharing the wealth: Factors influencing resource allocation in the sharing game. Journal of the Experimental Analysis of Behavior, 91, 337-354.

Fantino, E., and Silberberg, A. (2010). Revisiting the Role of Bad News in Maintaining Human Observing Behavior. Journal of the Experimental Analysis of Behavior, 93, in press.

Fantino, E., and Stolarz-Fantino, S. (2002). The role of negative reinforcement; or: Is there an altruist in the house? Behavioral and Brain Sciences, 25, 257-258.

Fantino, E., Stolarz-Fantino, S., and Navarro, A. (2003). Logical fallacies: A behavioral approach to reasoning. The Behavior Analyst Today, 4, 102-110.

Goodie, A.S., and Fantino, E. (1996). Learning to commit or avoid the base-rate error. Nature, 380, 247-249.

Navarro, A. D., and Fantino, E. (2005). The sunk cost effect in pigeons and humans. Journal of the Experimental Analysis of Behavior, 83, 1-13.

Navarro, A. D., and Fantino, E. (2009). The sunk-time effect: An exploration. Journal of Behavioral Decision Making, 22, 252-270.

Stolarz-Fantino, S., Fantino, E., and Van Borst, N. (2006). Use of base-rates and case-cue information in making likelihood estimates. Memory & Cognition, 34, 603-618.

Stolarz-Fantino, S., Fantino, E., Zizzo, D.J., and Wen, J. (2003). The conjunction effect: New evidence for robustness. American Journal of Psychology, 116, 15-34.

Suggested Readings

Arkes, H.R., & Blumer, C. (1985). The psychology of sunk cost. Organizational Behavior and Human Decision Processes, 35, 124-140.

Bloomfield, R. (2008). Behavioural finance. In S. N. Durlauf & L. E. Blume (Eds.), The New Palgrave Dictionary of Economics (2nd ed.). Palgrave Macmillan. The New Palgrave Dictionary of Economics Online (2009), Palgrave Macmillan.

Fantino, E. & Esfandiari, A. (2002). Canadian Journal of Experimental Psychology/Revue canadienne de psychologie expérimentale, Vol 56 (1), 58-63.

Goltz, S.M. (1992). A sequential learning analysis of continued investments of organizational resources in nonperforming courses of action. Journal of Applied Behavior Analysis, 25, 561-574.

Goltz, S.M. (1999). Can’t stop on a dime: The roles of matching and momentum in persistence of commitment. Journal of Organizational Behavior Management, 19, 37-63.

Tversky, A., & Kahneman, D. (1982). Evidential impact of base rates. In D. Kahneman, P. Slovic, & A. Tversky (Eds.), Judgment under uncertainty: Heuristics and biases (pp. 153-160). Cambridge, U.K.: Cambridge University Press.

Navarro, A.D. & Fantino, E. (2007). The role of discriminative stimuli in the sunk-cost effect. Mexican Journal of Behavior Analysis, 33, 19-29.